Beyond ChatGPT: AI That Actually Experiences the World

These machines are learning the hard way (just like us)

We've all played around with ChatGPT or watched AI create stunning artwork. It's pretty amazing what these systems can do just by crunching through massive amounts of text and images. But here's the thing – as cool as they are, they're missing something crucial: they've never actually touched anything or stumbled around in the real world like we do.

That's where embodied AI comes in, and it's honestly one of the most exciting frontiers in robotics right now.

Learning Like Humans Do

Think about how you learned to ride a bike. Did you memorize physics equations about balance and momentum? Of course not! You got on, wobbled around, probably scraped a knee or two, and eventually figured it out through pure trial and error. Your body, your senses, and the world around you all worked together to teach you.

This idea – that we learn through our bodies, not just our brains – is called embodied cognition. And it's exactly what researchers are trying to give robots.

Instead of just feeding machines data like we do with language models, embodied AI lets robots learn by actually doing stuff. They touch things, move around, mess up, and adjust – just like we do. It's learning through living, basically.
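
If you want to see the "mess up and adjust" loop in miniature, here's a toy sketch in Python. To be clear, everything in it is made up for illustration: the `try_grip` function, the list of grip forces, and the 0.6 "sweet spot" are assumptions, not anything from a real robot. It uses a simple strategy often called epsilon-greedy: mostly repeat whatever has worked best so far, but occasionally try something random.

```python
import random

def try_grip(force, rng):
    """Hypothetical world: a grip succeeds most often when force is near 0.6."""
    success_chance = max(0.0, 1.0 - 2.5 * abs(force - 0.6))
    return 1.0 if rng.random() < success_chance else 0.0

def learn_by_doing(forces, trials=2000, explore=0.1, seed=0):
    """Learn which grip force works by trying, failing, and keeping score."""
    rng = random.Random(seed)
    attempts = [0] * len(forces)
    value = [0.0] * len(forces)  # running average of success for each force
    for _ in range(trials):
        if rng.random() < explore:
            i = rng.randrange(len(forces))  # occasionally try something random
        else:
            i = max(range(len(forces)), key=lambda k: value[k])  # repeat the best so far
        reward = try_grip(forces[i], rng)
        attempts[i] += 1
        value[i] += (reward - value[i]) / attempts[i]  # update the running average
    return value, attempts

forces = [0.2, 0.4, 0.6, 0.8, 1.0]
value, attempts = learn_by_doing(forces)
best = forces[max(range(len(forces)), key=lambda k: value[k])]
print("best grip force found:", best)
print("attempts per force:", attempts)
```

Nobody tells the program which force is right. It starts out squeezing too softly, fails a lot, stumbles onto something better, and then sticks with it. Real embodied learning is vastly more complicated, but the basic rhythm of try, fail, adjust is the same.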

Why the Real World Is So Damn Hard

Here's what makes physical learning way trickier than digital learning:

Real consequences: When ChatGPT makes a mistake, nobody gets hurt. When a robot messes up, it might break something (or itself).

Everything's messy: The real world doesn't come with neat labels. A robot has to figure out what's a chair, what's a threat, and what's just weird lighting – all while things keep changing around it.

No pause button: Unlike AI that can take its sweet time processing data, robots need to think and act on the fly. The world doesn't wait.

Goals keep shifting: What worked in the lab might be useless in your living room. Robots need to roll with the punches.

Your body shapes your mind: A robot with wheels experiences the world completely differently than one with arms and legs. The hardware literally changes how a robot can learn.

Today's Robots: Really Good at One Thing

Most robots today are basically very expensive, very precise tools. They're fantastic at doing the same thing over and over in controlled environments – like assembling cars or sorting packages. But throw them a curveball, and they're stuck.

These robots are kept safely away from humans because, frankly, they can't adapt when something unexpected happens. They need constant babysitting and can only work in their perfectly controlled bubble.

Robots That Learn by Doing

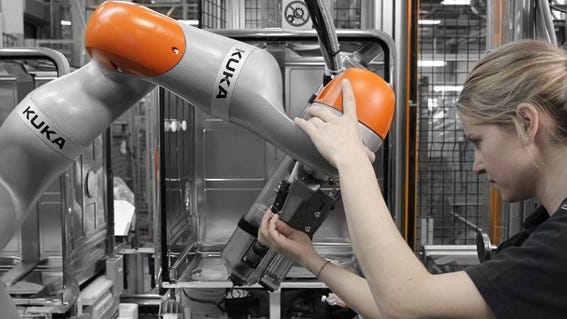

Robots are getting smarter about using their bodies to learn. The idea isn't new – researchers have been exploring it since the 1940s – but we're finally seeing real breakthroughs. Some robots can now "feel" with touch sensors, and soft robots adapt by physically changing shape. At Toyota, robots learn new skills by watching humans, just like kids copy their parents. Cobots like KUKA's LBR iiwa and LBR iisy work safely alongside people, learning from each interaction to improve. And the latest humanoid robots are figuring out the real world by walking around and learning as they go.

Could This Lead to True AI?

Here's where it gets really interesting. While language models are impressive, they're basically very sophisticated pattern-matching machines. They've never actually experienced cause and effect in the physical world.

Embodied AI could be our path to artificial general intelligence – AI that's truly adaptable and intelligent like humans. By grounding learning in physical experience, these systems might develop the kind of flexible, common-sense understanding that current AI lacks.

Think about it: every intelligent creature we know – from humans to dolphins to octopuses – learned about the world through its body. Maybe that's not a coincidence.

What's Next?

We're still figuring out the big challenges – keeping robots safe while they learn, making them adaptable enough for the real world, and getting them to learn fast enough to be useful. But the potential is huge.

Imagine robots that could actually help elderly people at home, adapting to each person's unique needs. Or rescue robots that could navigate disaster zones, learning and adapting as conditions change.

The weird thing is, while everyone's throwing money at language models and image generators, embodied AI is still pretty underexplored. That's actually exciting – it means there's so much room for breakthroughs.

The Bottom Line

Embodied AI isn't just about making better robots (though that's pretty cool too). It's about creating machines that understand the world the way we do – through experience, trial and error, and yes, even failure.

By giving AI systems bodies and letting them learn through physical interaction, we might finally bridge the gap between narrow, specialized AI and the kind of general intelligence that can truly adapt to our messy, unpredictable world.

And honestly? That future can't come soon enough.
